Title: Mastering the Fundamentals: Big Data Basics Exam

Section 1: Introduction to Big Data

Question 1:

Define Big Data and explain its three key characteristics.

Answer:

Big Data refers to the vast volumes of structured, semi-structured, and unstructured data that inundate a business on a day-to-day basis. Its three key characteristics are:

1. Volume: Big Data involves large volumes of data, often exceeding the capacity of traditional database systems.

2. Velocity: Data streams in at an unprecedented speed and must be processed swiftly to gain timely insights.

3. Variety: Big Data encompasses diverse types of data, including structured data from traditional databases, semi-structured data like XML files, and unstructured data like text documents and social media posts.
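To make the Variety characteristic concrete, the minimal Python sketch below pulls the same value out of a structured CSV row, a semi-structured XML fragment, and an unstructured text post. The sample records, field names, and regular expression are all invented for illustration; real pipelines would need far more robust parsing.

```python
import csv
import io
import re
import xml.etree.ElementTree as ET

# Hypothetical sample records: the same purchase expressed three ways.
structured = "customer,amount\nalice,30\n"
semi_structured = "<order><customer>alice</customer><amount>30</amount></order>"
unstructured = "Alice just spent $30 at our store, great day!"

def amount_from_csv(text):
    # Structured: fixed columns, so the field is addressed by name.
    row = next(csv.DictReader(io.StringIO(text)))
    return int(row["amount"])

def amount_from_xml(text):
    # Semi-structured: self-describing tags, but no rigid table schema.
    return int(ET.fromstring(text).findtext("amount"))

def amount_from_text(text):
    # Unstructured: no schema at all, so fall back to pattern matching.
    match = re.search(r"\$(\d+)", text)
    return int(match.group(1)) if match else None

amounts = [
    amount_from_csv(structured),
    amount_from_xml(semi_structured),
    amount_from_text(unstructured),
]
print(amounts)
```

Note how the effort shifts from looking up a named column to guessing at a pattern as the structure disappears, which is why variety complicates analysis.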

Question 2:

What are the primary challenges associated with Big Data?

Answer:

The primary challenges associated with Big Data include:

1. Storage: Storing large volumes of data cost-effectively and efficiently.

2. Processing: Processing data quickly to extract insights in real time.

3. Analysis: Analyzing diverse types of data to derive meaningful insights.

4. Privacy and Security: Ensuring the privacy and security of sensitive data.

5. Quality: Maintaining the quality and accuracy of data amidst its vastness and variety.

Section 2: Big Data Technologies

Question 3:

Explain the role of Hadoop in Big Data processing.Answer:

Hadoop is an opensource framework that facilitates the distributed processing of large datasets across clusters of computers using simple programming models. Its core components include:1.

Hadoop Distributed File System (HDFS)

: A distributed file system that stores data across multiple machines.2.

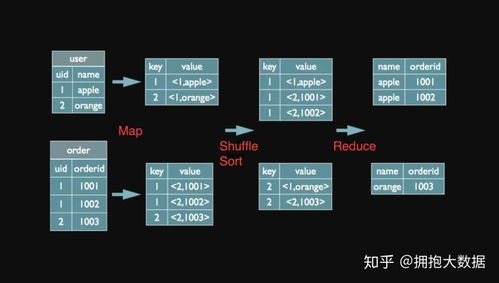

MapReduce

: A programming model for processing and generating large datasets in parallel.3.

YARN (Yet Another Resource Negotiator)

: A resource management layer that schedules tasks and manages resources in Hadoop clusters.Hadoop enables scalable, reliable, and efficient processing of Big Data by distributing data and computation across clusters of commodity hardware.
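The MapReduce programming model can be illustrated with the classic word-count example. The sketch below is plain, single-process Python rather than Hadoop's own API; the function names and the sample input splits are assumptions chosen for illustration. It only shows the three logical phases (map, shuffle, reduce) that a real cluster would run in parallel across machines.

```python
from collections import defaultdict

def map_phase(document):
    # Map: emit one (word, 1) pair per word in the input split.
    return [(word.lower(), 1) for word in document.split()]

def shuffle_phase(pairs):
    # Shuffle: group all emitted values by their key.
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    # Reduce: combine the values of each key into a single result.
    return {key: sum(values) for key, values in grouped.items()}

def word_count(documents):
    pairs = []
    for doc in documents:  # on a cluster, each split would be mapped in parallel
        pairs.extend(map_phase(doc))
    return reduce_phase(shuffle_phase(pairs))

# Hypothetical input splits standing in for blocks of a large file.
splits = ["big data big clusters", "data moves to clusters"]
counts = word_count(splits)
print(counts)
```

Because each map call looks only at its own split and each reduce call looks only at one key, the work can be spread across many machines without the pieces needing to coordinate.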

Question 4:

Describe the role of Apache Spark in Big Data analytics.

Answer:

Apache Spark is an open-source, distributed computing system that provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. Its key features include:

1. Speed: Spark performs in-memory computations, making it faster than Hadoop's MapReduce for certain applications.

2. Ease of Use: Spark offers APIs in Java, Scala, Python, and R, making it accessible to a wide range of developers.

3. Versatility: Spark supports a variety of workloads, including batch processing, real-time streaming, machine learning, and graph processing.

Spark's flexibility and speed make it a popular choice for Big Data analytics tasks requiring iterative processing and interactive queries.
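A toy model can show why in-memory computation helps iterative workloads. The sketch below is plain Python, not Spark's API: `CountingSource` is a hypothetical stand-in for a slow storage read, and the two functions loosely contrast reloading a dataset on every pass with loading it once and reusing it from memory.

```python
class CountingSource:
    """Hypothetical stand-in for a slow storage read, such as a disk scan."""

    def __init__(self, values):
        self._values = list(values)
        self.reads = 0  # how many times the data was fetched from "storage"

    def load(self):
        self.reads += 1
        return list(self._values)

def iterate_without_cache(source, passes):
    # Reload the dataset from storage on every pass.
    total = 0
    for _ in range(passes):
        total += sum(source.load())
    return total

def iterate_with_cache(source, passes):
    # Load once, keep the dataset in memory, and reuse it on every pass.
    cached = source.load()
    total = 0
    for _ in range(passes):
        total += sum(cached)
    return total

disk_style = CountingSource(range(5))
memory_style = CountingSource(range(5))
result_a = iterate_without_cache(disk_style, passes=10)
result_b = iterate_with_cache(memory_style, passes=10)
print(disk_style.reads, memory_style.reads)
```

Both approaches compute the same answer, but the cached version touches storage once instead of once per pass, which is the kind of saving that benefits iterative algorithms and interactive queries.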

Section 3: Data Management and Analysis

Question 5:

Explain the concept of data warehousing and its significance in Big Data analytics.

Answer:

Data warehousing is the process of collecting, storing, and managing large volumes of data from various sources to support business decision-making. Its significance in Big Data analytics lies in:

1. Centralized Storage: Data warehouses consolidate data from multiple sources into a single repository, enabling easier access and analysis.

2. Historical Analysis: Data warehouses store historical data, allowing organizations to perform trend analysis and make informed decisions based on past performance.

3. Business Intelligence: Data warehouses facilitate the extraction of actionable insights through tools like reporting, data mining, and online analytical processing (OLAP).

4. Data Quality: By integrating and cleansing data, data warehouses improve the quality and consistency of data used for analysis.

Question 6:

Discuss the importance of data visualization in Big Data analytics.

Answer:

Data visualization is crucial in Big Data analytics for the following reasons:

1. Insight Discovery: Visual representations of data make it easier to identify patterns, trends, and correlations that may not be apparent from raw data.

2. Communication: Visualizations help communicate complex information and insights to stakeholders in a clear and understandable manner.

3. Decision Making: Visualizations enable decision-makers to quickly grasp key insights and make informed decisions based on data.

4. Exploratory Analysis: Interactive visualizations allow users to explore data dynamically, uncovering new insights and hypotheses.

5. Storytelling: Visualizations can be used to tell compelling stories with data, engaging audiences and driving action.

In conclusion, mastering the fundamentals of Big Data is essential for navigating the complexities of data management, processing, and analysis in today's data-driven world. By understanding the core concepts, technologies, and best practices outlined in this exam, individuals can effectively harness the power of Big Data to drive innovation, make data-driven decisions, and gain a competitive edge in their respective fields.
