Title: Establishing an Efficient Big Data Operations Framework

In today's digital landscape, big data has become the cornerstone of many industries, driving decision-making processes, optimizing operations, and fostering innovation. However, harnessing the power of big data requires a robust operational framework to manage, process, and analyze vast amounts of information effectively. Let's delve into the key components of a comprehensive big data operations system and explore best practices for its implementation.

Understanding Big Data Operations

Big data operations encompass a range of activities aimed at ensuring the smooth functioning of data-related processes within an organization. This includes data collection, storage, processing, analysis, and visualization. A well-designed big data operations framework facilitates the extraction of actionable insights from complex datasets, enabling businesses to make informed decisions and gain a competitive edge.

Key Components of a Big Data Operations Framework

1. Data Acquisition and Ingestion:

Implement robust mechanisms for collecting data from diverse sources, including sensors, social media, enterprise systems, and IoT devices.

Utilize data ingestion tools like Apache Kafka, Flume, or custom solutions to ingest streaming and batch data efficiently.
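One common way to bridge streaming and batch ingestion is micro-batching: records arriving from a stream are grouped into small fixed-size batches before being written downstream. The sketch below is a minimal, standard-library illustration of that idea and does not use the Kafka or Flume APIs. The function name `micro_batches` and the batch size are hypothetical choices made for this example.

```python
from itertools import islice

def micro_batches(stream, batch_size):
    """Group an iterable of records into lists of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(stream)
    while True:
        # Pull up to batch_size records; the last batch may be smaller.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

if __name__ == "__main__":
    # Stand-in for a stream of sensor readings.
    readings = ({"sensor": i % 3, "value": i * 0.5} for i in range(7))
    for batch in micro_batches(readings, batch_size=3):
        print(len(batch), "records:", batch)
```

In a real pipeline the `stream` argument would likely be a consumer loop from the chosen ingestion tool, and each batch would be handed to a storage writer.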

2. Data Storage:

Choose scalable and reliable storage solutions capable of handling the volume, velocity, and variety of big data, such as Hadoop Distributed File System (HDFS), Amazon S3, or Google Cloud Storage.

Implement data partitioning and replication strategies to ensure data availability and fault tolerance.
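To make partitioning and replication more concrete, here is a minimal sketch, assuming a simple hash-based scheme. It is not how HDFS, Amazon S3, or Google Cloud Storage place data internally. The node names, partition count, and replication factor are illustrative assumptions.

```python
import hashlib

def partition_for(key, num_partitions):
    """Map a record key to a partition using a stable hash of the key."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_partitions

def replica_nodes(partition, nodes, replication_factor):
    """Choose `replication_factor` distinct nodes for a partition, round-robin."""
    if replication_factor > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return [nodes[(partition + i) % len(nodes)] for i in range(replication_factor)]

if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical cluster
    for key in ["sensor-17", "user-42", "order-9001"]:
        p = partition_for(key, num_partitions=8)
        print(key, "-> partition", p, "replicas on", replica_nodes(p, nodes, 3))
```

Because each partition is copied to several distinct nodes, losing a single node still leaves other replicas available, which is the fault-tolerance property the strategy aims for.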

3. Data Processing:

Leverage distributed processing frameworks like Apache Hadoop, Apache Spark, or Apache Flink for parallel processing of large datasets.

Optimize data processing workflows to minimize latency and maximize throughput, considering factors like data locality and resource utilization.
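The split, process, and merge pattern behind these frameworks can be sketched on a single machine. The example below is a simplified illustration under stated assumptions, not Spark or Flink code: it splits records into chunks, counts event types in each chunk concurrently, and merges the partial results. Threads are used only to keep the sketch self-contained, and the `type` field on each record is a hypothetical schema.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(records, num_chunks):
    """Split a list into roughly equal contiguous chunks."""
    size = max(1, -(-len(records) // num_chunks))  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]

def count_events(chunk):
    """Map step: count event types within one chunk."""
    return Counter(event["type"] for event in chunk)

def parallel_event_counts(records, num_workers=4):
    """Run the map step on each chunk concurrently, then merge the partial counts."""
    chunks = split_into_chunks(records, num_workers)
    total = Counter()
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        for partial in pool.map(count_events, chunks):
            total.update(partial)  # reduce step
    return total

if __name__ == "__main__":
    events = [{"type": t} for t in ["click", "view", "click", "purchase", "view", "click"]]
    print(parallel_event_counts(events, num_workers=3))
```

A distributed framework applies the same idea across machines and tries to schedule each map task close to the data it reads, which is where data locality comes in.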

4. Data Analysis and Machine Learning:

Employ data analytics platforms such as Apache Hive, Apache Pig, or Presto for querying and analyzing structured and semi-structured data.

Integrate machine learning frameworks like TensorFlow, scikit-learn, or PyTorch for building predictive models and extracting valuable insights from data.

5. Data Governance and Security:

Establish policies and procedures to ensure data quality, integrity, and compliance with regulatory requirements.

Implement access control mechanisms, encryption, and auditing capabilities to protect sensitive data from unauthorized access and data breaches.
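As a rough illustration of how access control and auditing fit together, the sketch below checks a role against a permission table and records every attempt, allowed or denied. It is a minimal example under stated assumptions: the roles, permissions, and the `AuditLog` class are hypothetical, and a production system would typically rely on a dedicated governance or identity tool instead.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping used only for this example.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}

@dataclass
class AuditLog:
    """In-memory audit trail; a real system would persist these entries."""
    entries: list = field(default_factory=list)

    def record(self, user, role, action, dataset, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "dataset": dataset,
            "allowed": allowed,
        })

def is_allowed(role, action):
    """Unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, set())

def access(user, role, action, dataset, audit):
    """Check permission and log the attempt whether or not it succeeds."""
    allowed = is_allowed(role, action)
    audit.record(user, role, action, dataset, allowed)
    return allowed

if __name__ == "__main__":
    audit = AuditLog()
    print(access("dana", "analyst", "read", "sales", audit))
    print(access("dana", "analyst", "delete", "sales", audit))
    print(len(audit.entries), "audit entries recorded")
```

Logging denied attempts as well as successful ones is a deliberate choice here, since repeated denials are often the first sign of misuse.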

6. Monitoring and Performance Optimization:

Deploy monitoring tools like Apache Ambari, Prometheus, or the ELK Stack (Elasticsearch, Logstash, Kibana) to track system health, performance metrics, and resource utilization.

Continuously optimize the big data infrastructure based on performance bottlenecks and emerging requirements to maintain scalability and efficiency.
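Spotting bottlenecks usually starts with comparing collected metrics against thresholds. The sketch below is a minimal, standard-library illustration rather than the API of Ambari, Prometheus, or the ELK Stack. It computes a nearest-rank percentile for latency samples and reports which metrics exceed their limits. The metric names and threshold values are hypothetical.

```python
# Hypothetical alert thresholds; tune these to your own service-level objectives.
THRESHOLDS = {"cpu_percent": 85.0, "disk_percent": 90.0, "p95_latency_ms": 500.0}

def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not values:
        raise ValueError("values must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct/100 * n
    return ordered[rank - 1]

def find_breaches(metrics, thresholds):
    """Return the sorted names of metrics whose value exceeds its threshold."""
    return sorted(
        name for name, limit in thresholds.items()
        if name in metrics and metrics[name] > limit
    )

if __name__ == "__main__":
    latencies_ms = [100, 200, 300, 400, 1000]
    metrics = {
        "cpu_percent": 91.2,
        "disk_percent": 40.0,
        "p95_latency_ms": percentile(latencies_ms, 95),
    }
    print("Breached thresholds:", find_breaches(metrics, THRESHOLDS))
```

Tracking a high percentile rather than the average is a common choice because a few slow jobs can hide behind a healthy-looking mean.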

Best Practices for Implementing Big Data Operations

1. Start with a Clear Strategy:

Define your organization's objectives, data requirements, and desired outcomes before embarking on big data initiatives. Align your operational framework with business goals to maximize value.

2. Embrace Automation:

Leverage automation tools and DevOps practices to streamline deployment, configuration, and management tasks, reducing manual effort and minimizing errors.

3. Ensure Scalability and Flexibility:

Design your big data infrastructure to scale horizontally to accommodate growing data volumes and evolving business needs. Embrace cloudbased solutions for elasticity and ondemand resource provisioning.4.

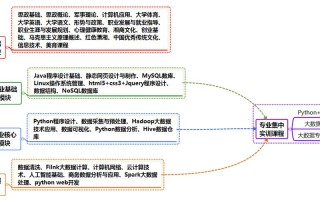

Invest in Talent and Training:

Build a team of skilled professionals with expertise in big data technologies, data engineering, and data science. Provide ongoing training and development opportunities to keep pace with technological advancements.

5. Promote Collaboration and Knowledge Sharing:

Foster a culture of collaboration between data engineers, data scientists, and business stakeholders to drive innovation and facilitate cross-functional insights.

6. Iterate and Improve:

Continuously monitor system performance, user feedback, and industry trends to identify areas for improvement. Embrace an iterative approach to refine your big data operations framework and adapt to changing requirements.

In conclusion, establishing an efficient big data operations framework is essential for organizations looking to unlock the full potential of their data assets. By incorporating key components such as data acquisition, storage, processing, analysis, governance, and optimization, and following best practices for implementation, businesses can harness the power of big data to drive growth, innovation, and competitive advantage.

Remember, the journey to effective big data operations is ongoing. Stay agile, stay informed, and continuously strive for excellence in managing and leveraging your data resources.
