Title: Building a Scalable Web Crawling System for Big Data Projects

Big data projects depend on a steady supply of raw data, and for many of them the web is the largest source. Web crawling, a fundamental component of many big data projects, is the automated collection of pages from across the internet. This article walks through the components of a robust, scalable web crawling system for big data projects and the practices that keep it reliable as data volumes grow.

Understanding the Basics

What is Web Crawling?

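Web crawling is the process of automatically discovering and fetching web pages, usually by following the hyperlinks found on pages that have already been visited. It is closely related to web scraping, which is the extraction of specific data from the fetched pages; the two terms are often used interchangeably. A complete system fetches web pages, extracts relevant data, and stores it for further analysis.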

Importance of Scalability

Scalability is paramount in big data projects because they deal with massive volumes of data. A scalable web crawling system should handle a growing number of pages and sites by adding resources, without a sharp drop in throughput or reliability.

Components of a Web Crawling System

1. Crawler

The crawler, or web spider, is responsible for fetching web pages. It traverses the web, following hyperlinks and downloading content. For big data projects, consider using distributed crawlers capable of parallel processing to expedite data retrieval.
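The traversal described above can be sketched as a breadth-first loop over a frontier of URLs. This is a minimal single-process illustration, not a production crawler: the `crawl` function, the `fetch_links` callback, and the `TOY_WEB` dictionary are hypothetical names, and the toy dictionary stands in for real HTTP requests so that the example runs offline.

```python
from collections import deque

def crawl(seeds, fetch_links, max_pages=100):
    """Visit each URL at most once, breadth-first, up to max_pages."""
    frontier = deque(seeds)   # URLs waiting to be fetched
    seen = set(frontier)      # URLs already queued or visited
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        # fetch_links is assumed to download the page and return its links.
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited

# Toy in-memory "web" (an assumption for illustration, not real sites).
TOY_WEB = {
    "a": ["b", "c"],
    "b": ["c", "d"],
    "c": ["a"],
    "d": [],
}

order = crawl(["a"], lambda url: TOY_WEB.get(url, []))
print(order)
```

In a distributed crawler the frontier and the `seen` set would typically live in shared storage or a message queue rather than in one process's memory.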

2. Parser

Once the crawler fetches web pages, the parser extracts structured data from the raw HTML. Parsing algorithms must be robust to handle variations in page layouts and HTML structures across different websites.
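As a rough illustration of the parsing step, the sketch below uses Python's standard-library `html.parser` to pull a page title and outgoing links from raw HTML. The `PageParser` class, the `parse_page` helper, and the sample markup are assumptions made for this example; real projects often use a dedicated parsing library and site-specific extraction rules.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class PageParser(HTMLParser):
    """Collects the <title> text and absolute link targets from a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors without a target
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def parse_page(html, base_url):
    parser = PageParser(base_url)
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}

# Sample markup with a missing href and an unclosed <p>, to show tolerance
# of imperfect HTML.
sample = """<html><head><title> Example Page </title></head>
<body><a href="/about">About</a> <a href="https://example.org/x">X</a>
<a>no href</a><p>Unclosed paragraph</body></html>"""

result = parse_page(sample, "https://example.com/index.html")
print(result)
```

Relative links are resolved against the page URL with `urljoin`, so the crawler only ever queues absolute URLs.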

3. Data Storage

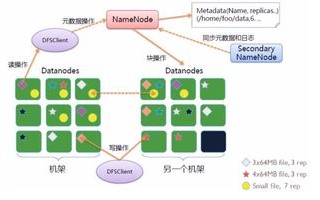

Efficient storage is critical for handling large volumes of crawled data. Choose a scalable database or distributed file system capable of storing and retrieving data rapidly. Options include Apache Hadoop HDFS, Apache Cassandra, or Amazon S3.

4. Deduplication

The same content is often reachable under several URLs or repeated across pages, which can inflate storage costs and skew analysis results. Implement deduplication mechanisms to identify and eliminate duplicate data during the crawling process, both at the URL level and at the content level.
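One simple way to approach deduplication is to combine URL canonicalization with a hash of the page text. The sketch below is an assumption-laden illustration: `canonical_url`, `content_fingerprint`, and `Deduplicator` are hypothetical names, the normalization rules are deliberately simple, and the in-memory sets would need to be replaced by shared storage in a distributed setup.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def canonical_url(url):
    """Lowercase scheme and host, sort query parameters, drop the fragment."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, query, ""))

def content_fingerprint(text):
    """SHA-256 of the text after collapsing whitespace and lowercasing."""
    normalized = " ".join(text.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

class Deduplicator:
    """Tracks canonical URLs and content hashes seen so far (in memory)."""

    def __init__(self):
        self._urls = set()
        self._hashes = set()

    def is_new(self, url, text):
        key = canonical_url(url)
        digest = content_fingerprint(text)
        if key in self._urls or digest in self._hashes:
            return False
        self._urls.add(key)
        self._hashes.add(digest)
        return True

dedup = Deduplicator()
print(dedup.is_new("http://example.com/a?x=1&y=2", "Hello  world"))
print(dedup.is_new("http://EXAMPLE.com/a?y=2&x=1#frag", "different text"))
```

An exact hash only catches pages whose normalized text is identical. Detecting near-duplicates, such as pages that differ only in a timestamp or advertisement, usually calls for similarity techniques like SimHash or MinHash instead.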

5. Monitoring and Maintenance

Continuous monitoring is essential to ensure the crawling system operates smoothly. Monitor for errors, performance bottlenecks, and changes in website structures. Regular maintenance, such as updating parsers to adapt to site changes, is crucial for long-term reliability.

Best Practices for Building a Scalable Web Crawling System

1. Distributed Architecture

Employ a distributed architecture to distribute crawling tasks across multiple machines or nodes. This approach enhances scalability and fault tolerance by mitigating the risk of a single point of failure.
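A common way to split work across nodes is to assign each URL to a worker based on a hash of its host, so that all pages from one site land on the same machine. The following is a minimal sketch of that idea; `assign_worker` and `partition` are hypothetical helpers, and the worker count of 3 is an arbitrary assumption.

```python
import hashlib
from urllib.parse import urlsplit

def assign_worker(url, num_workers):
    """Map a URL to a worker index using a stable hash of its host."""
    host = urlsplit(url).hostname or ""
    # hashlib gives the same result on every machine and every run,
    # unlike the built-in hash(), which is randomized per process for strings.
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers

def partition(urls, num_workers):
    """Group URLs into one bucket per worker."""
    buckets = {i: [] for i in range(num_workers)}
    for url in urls:
        buckets[assign_worker(url, num_workers)].append(url)
    return buckets

urls = [
    "https://a.example/1",
    "https://a.example/2",
    "https://b.example/x",
    "https://c.example/y",
]
for worker, assigned in partition(urls, 3).items():
    print(worker, assigned)
```

Keeping a host on a single worker makes per-site rate limiting easier to enforce. The trade-off is that plain modulo hashing reassigns most hosts whenever the number of workers changes; consistent hashing is a frequently used alternative when nodes are added or removed often.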

2. Rate Limiting and Politeness

Respect robots.txt directives and implement rate-limiting mechanisms to avoid overwhelming web servers with excessive requests. Adhering to politeness guidelines reduces the risk of being blocked and fosters positive relationships with website owners.
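The two politeness measures above can be sketched with the standard library: `urllib.robotparser` for robots.txt rules and a small per-host delay tracker for rate limiting. The `HostRateLimiter` class, the 2-second delay, the `example-crawler` user agent, and the inline robots.txt content are all assumptions for illustration; a real crawler would download each site's robots.txt and call `time.sleep` with the returned wait time.

```python
import time
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

class HostRateLimiter:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay=1.0, clock=time.monotonic):
        self.min_delay = min_delay
        self.clock = clock           # injectable so the logic can be tested
        self._next_allowed = {}      # host -> earliest time of next request

    def wait_time(self, url):
        """Reserve the next slot for the URL's host; return seconds to wait."""
        host = urlsplit(url).hostname or ""
        now = self.clock()
        ready_at = max(now, self._next_allowed.get(host, now))
        self._next_allowed[host] = ready_at + self.min_delay
        return ready_at - now

# Inline robots.txt content (assumed for the example, not fetched).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""
robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

limiter = HostRateLimiter(min_delay=2.0)
for url in ["https://example.com/public/page", "https://example.com/private/data"]:
    if robots.can_fetch("example-crawler", url):
        print("fetch", url, "after waiting", limiter.wait_time(url), "s")
    else:
        print("skip", url, "(disallowed by robots.txt)")
```

Tracking delays per host rather than globally lets the crawler stay fast overall while still spacing out requests to any single site.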

3. Intelligent Scheduling

Prioritize crawling tasks based on factors such as freshness, importance, and bandwidth constraints. Implement intelligent scheduling algorithms to optimize crawling efficiency and resource utilization.
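A priority queue is one straightforward way to implement this kind of scheduling. In the sketch below, the `CrawlScheduler` class and its scoring formula (importance multiplied by staleness in hours) are assumptions chosen for illustration; the right weighting depends on the project.

```python
import heapq
import itertools

class CrawlScheduler:
    """Pops the URL with the highest priority score first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: first added, first out

    def add(self, url, importance, hours_since_last_crawl):
        # Assumed scoring rule: important pages and stale pages score higher.
        score = importance * (1.0 + hours_since_last_crawl)
        # heapq is a min-heap, so the score is negated to pop the largest first.
        heapq.heappush(self._heap, (-score, next(self._counter), url))

    def pop(self):
        _, _, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)

scheduler = CrawlScheduler()
scheduler.add("https://example.com/archive", importance=1.0, hours_since_last_crawl=0)
scheduler.add("https://example.com/news", importance=0.5, hours_since_last_crawl=10)
scheduler.add("https://example.com/home", importance=2.0, hours_since_last_crawl=1)

while len(scheduler):
    print(scheduler.pop())
```

Bandwidth constraints, mentioned above, could be folded in by capping how many URLs are popped per time window.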

4. Fault Tolerance and Retry Mechanisms

Design the crawling system with built-in fault tolerance mechanisms to handle network errors, server timeouts, and other unforeseen issues. Implement retry logic to reattempt failed requests so that transient failures do not cause pages to be lost.
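Retry logic is often implemented as exponential backoff with random jitter, so that repeated failures wait progressively longer and many workers do not retry in lockstep. The sketch below illustrates the pattern under stated assumptions: `TransientError`, `fetch_with_retry`, and the `flaky_fetch` stand-in are hypothetical names, and the attempt count and delays are arbitrary defaults.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, 5xx, resets)."""

def fetch_with_retry(fetch, url, max_attempts=4, base_delay=0.5,
                     max_delay=30.0, sleep=time.sleep):
    """Call fetch(url), retrying transient failures with backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and let the caller record the failure
            # The backoff ceiling doubles each attempt, capped at max_delay;
            # the actual wait is drawn at random below that ceiling.
            backoff = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, backoff))

# Stand-in fetch function that fails twice before succeeding.
attempts = {"count": 0}

def flaky_fetch(url):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("simulated timeout")
    return "content of " + url

# sleep is replaced with a no-op here so the example finishes instantly.
page = fetch_with_retry(flaky_fetch, "https://example.com/", sleep=lambda s: None)
print(page, "after", attempts["count"], "attempts")
```

Only errors that are likely to be temporary should be retried. Permanent failures, such as a page that no longer exists, are better recorded and skipped.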

5. Scalable Infrastructure

Scale the infrastructure horizontally by adding more servers or nodes as data volumes grow. Leverage cloud computing services for on-demand scalability and cost-effectiveness, allowing the system to adapt to fluctuating workloads.

Conclusion

Building a scalable web crawling system for big data projects requires careful consideration of various components and best practices. By leveraging distributed architectures, intelligent scheduling algorithms, and scalable infrastructure, organizations can effectively gather, process, and analyze vast amounts of data from the web. Implementing these strategies improves the reliability, efficiency, and scalability of the web crawling process, helping businesses extract valuable insights and support data-driven decision-making.


